Most call centers already track dozens of KPIs. But with that much data, it’s hard to know which metrics actually matter. When you prioritize the wrong numbers, your team ends up cutting handle time at the expense of resolution, or hitting service level targets while repeat contacts quietly pile up.

Inbound performance is interconnected. A drop in first call resolution increases repeat volume. Higher volume drives longer wait times. Longer wait times drive abandonment and customer dissatisfaction. One metric falling over can knock the rest down with it.

This article covers the inbound metrics that directly affect cost, queue stability, and customer experience, then gets into how to track them in a way that informs decisions, not just populates reports.

Core inbound call center metrics that drive outcomes

Not every KPI deserves equal attention. Some look useful on dashboards but don’t move the needle operationally. Others directly affect queue stability, cost per contact, and how customers feel about calling you.

The metrics below matter because they determine how smoothly inbound demand moves through your system and whether issues get resolved without creating repeat work.

First Call Resolution (FCR)

FCR is probably the single best indicator of whether your inbound operation is working. It measures how often a customer’s issue gets resolved during the first interaction, and when that rate drops, the effects compound fast. Repeat contacts inflate call volume, add queue pressure, and throw off your staffing forecasts.

The tricky part is tracking it. You need clean outcome categories, which means wrap-up codes and call outcomes have to be applied consistently, not just occasionally. If agents use them differently, your data is unreliable before you even start analyzing it. CRM activity logging also helps here. When a caller matches an existing CRM record, you can see when the same person reaches out again within a set window, which gives you a much clearer picture of repeat contact patterns than wrap-up codes alone.
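As a rough illustration of the CRM-based approach, the sketch below estimates a repeat-contact proxy for FCR: a contact counts as resolved if the same caller does not reach out again within a set window. The function name, the `(caller_id, timestamp)` layout, and the 7-day window are assumptions made for this example, not a standard definition. The proxy also cannot tell whether a follow-up concerns the same issue, so treat it as a complement to wrap-up codes rather than a replacement.

```python
from datetime import datetime, timedelta

def fcr_proxy(contacts, window_days=7):
    """Share of contacts NOT followed by another contact from the same
    caller within `window_days`. `contacts` is a list of
    (caller_id, timestamp) tuples (hypothetical layout)."""
    window = timedelta(days=window_days)

    # Group contact timestamps by caller.
    by_caller = {}
    for caller_id, ts in contacts:
        by_caller.setdefault(caller_id, []).append(ts)

    total = 0
    resolved = 0
    for times in by_caller.values():
        times.sort()
        for i, ts in enumerate(times):
            total += 1
            is_last = i + 1 == len(times)
            # Resolved if there is no later contact inside the window.
            if is_last or times[i + 1] - ts > window:
                resolved += 1
    return resolved / total if total else 0.0

contacts = [
    ("A", datetime(2024, 3, 1, 9, 0)),
    ("A", datetime(2024, 3, 3, 10, 0)),   # repeat within 7 days
    ("B", datetime(2024, 3, 2, 11, 0)),
    ("C", datetime(2024, 3, 2, 12, 0)),
    ("C", datetime(2024, 3, 20, 12, 0)),  # outside the window
]
print(fcr_proxy(contacts))  # 0.8
```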

Average Handle Time (AHT)

Here’s the problem with AHT: it’s the metric most likely to be misused.

AHT measures how long an interaction takes from start to finish, including talk time, hold time, and after-call work. It directly affects staffing. If handle time goes up, you need more agents to maintain the same service level. If it goes down, queues move faster, but only if resolution quality holds.

The risk is treating it as a speed target. When teams focus only on shortening calls, first call resolution tends to drop. That creates repeat contacts, which increases total workload. You end up running faster to stay in the same place.

Worth noting: AHT should be monitored at the queue level. Technical support, billing, and general inquiries will naturally have different handle times. Comparing them without that context leads to bad decisions.
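A minimal sketch of queue-level AHT, assuming each call record carries talk, hold, and after-call work durations in seconds. The field names (`queue`, `talk_s`, `hold_s`, `acw_s`) are hypothetical and would need to be mapped to whatever your reporting export actually provides.

```python
from collections import defaultdict

def aht_by_queue(calls):
    """Average handle time in seconds per queue:
    (talk + hold + after-call work) / number of calls."""
    totals = defaultdict(lambda: [0, 0])  # queue -> [total seconds, call count]
    for c in calls:
        entry = totals[c["queue"]]
        entry[0] += c["talk_s"] + c["hold_s"] + c["acw_s"]
        entry[1] += 1
    return {queue: secs / count for queue, (secs, count) in totals.items()}

calls = [
    {"queue": "tech_support", "talk_s": 600, "hold_s": 60, "acw_s": 120},
    {"queue": "tech_support", "talk_s": 480, "hold_s": 0, "acw_s": 60},
    {"queue": "billing", "talk_s": 200, "hold_s": 20, "acw_s": 40},
    {"queue": "billing", "talk_s": 180, "hold_s": 0, "acw_s": 40},
]
print(aht_by_queue(calls))  # {'tech_support': 660.0, 'billing': 240.0}
```

A blended average of these four calls would be 450 seconds, a number that describes neither queue.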

Average Speed of Answer (ASA) and Call Abandonment Rate

These two are closely related, so it makes sense to look at them together. ASA measures how long callers wait before reaching an agent. Abandonment rate measures how many give up and hang up before they get there. When ASA climbs, abandonment almost always follows.

Track ASA in short intervals throughout the day, not just as a daily average. A daily number can mask peak-hour congestion entirely. If ASA starts getting unstable, the cause is usually understaffing during certain intervals, unexpected volume spikes, or increased handle time.

Abandonment is a friction signal, but not every abandoned call means a frustrated customer. Separate very short abandons (calls disconnected within a few seconds) from meaningful ones. And like ASA, review it by time interval. Peak-hour abandonment tells a very different story than a daily average.

Together, these two metrics are your early warning system. They tell you when demand and capacity are out of balance before other metrics start to deteriorate.
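To make the interval view concrete, here is a hedged sketch that buckets calls into 30-minute intervals and reports ASA and abandonment per bucket, with very short abandons counted separately. The record fields, the 5-second short-abandon cutoff, and the choice to exclude short abandons from the abandonment denominator are all assumptions for illustration; the bucketing only works for interval lengths that divide 60 evenly.

```python
from collections import defaultdict
from datetime import datetime

def interval_stats(calls, interval_min=30, short_abandon_s=5):
    """Per-interval ASA and abandonment rate.
    Each call is a dict with `offered_at` (datetime), `wait_s`, `answered`."""
    buckets = defaultdict(lambda: {"answered": 0, "wait_sum": 0, "abandoned": 0, "short": 0})
    for c in calls:
        ts = c["offered_at"]
        # Round the timestamp down to the start of its interval.
        start = ts.replace(minute=(ts.minute // interval_min) * interval_min,
                           second=0, microsecond=0)
        b = buckets[start]
        if c["answered"]:
            b["answered"] += 1
            b["wait_sum"] += c["wait_s"]
        elif c["wait_s"] < short_abandon_s:
            b["short"] += 1       # likely misdial or instant hang-up
        else:
            b["abandoned"] += 1   # meaningful abandon

    report = {}
    for start, b in sorted(buckets.items()):
        offered = b["answered"] + b["abandoned"]  # short abandons excluded
        report[start] = {
            "asa_s": b["wait_sum"] / b["answered"] if b["answered"] else None,
            "abandon_rate": b["abandoned"] / offered if offered else None,
            "short_abandons": b["short"],
        }
    return report

day = datetime(2024, 3, 4)
calls = [
    {"offered_at": day.replace(hour=9, minute=5), "wait_s": 10, "answered": True},
    {"offered_at": day.replace(hour=9, minute=20), "wait_s": 30, "answered": True},
    {"offered_at": day.replace(hour=9, minute=25), "wait_s": 3, "answered": False},
    {"offered_at": day.replace(hour=9, minute=40), "wait_s": 90, "answered": False},
    {"offered_at": day.replace(hour=9, minute=45), "wait_s": 50, "answered": True},
    {"offered_at": day.replace(hour=9, minute=50), "wait_s": 70, "answered": True},
    {"offered_at": day.replace(hour=9, minute=55), "wait_s": 120, "answered": False},
]
for start, stats in interval_stats(calls).items():
    print(start.strftime("%H:%M"), stats)
```

In this sample the 09:00 interval shows a 20-second ASA and no meaningful abandons, while the 09:30 interval shows a 60-second ASA and half of offered calls abandoned. An hourly or daily average would blur that difference.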

Customer Satisfaction Score (CSAT)

CSAT (typically collected through post-call surveys) is the one metric on this list that tells you something the others can’t: how the customer actually felt.

But it’s easy to over-rely on. On its own, a CSAT score doesn’t tell you much. Pair it with operational data and it gets more useful:

- Low CSAT + long hold times probably means queue frustration.

- Low CSAT + low FCR probably means unresolved issues.

Response rate matters here. A small sample distorts results fast. Focus on trends over time rather than any single day’s numbers. CSAT fills in gaps that operational metrics can’t, but it doesn’t replace them.
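One way to keep small samples from driving decisions is to report the response count next to the score. The sketch below assumes a 1-to-5 survey scale where 4 and 5 count as satisfied, and uses an arbitrary minimum of 30 responses as the reliability cutoff; both are illustrative choices rather than fixed rules.

```python
def csat_summary(ratings, min_responses=30):
    """CSAT as the share of ratings >= 4 on an assumed 1-5 scale,
    flagged as unreliable when the sample is small."""
    n = len(ratings)
    if n == 0:
        return {"csat": None, "responses": 0, "reliable": False}
    satisfied = sum(1 for r in ratings if r >= 4)
    return {"csat": satisfied / n, "responses": n, "reliable": n >= min_responses}

print(csat_summary([5, 4, 3, 5, 2]))
# {'csat': 0.6, 'responses': 5, 'reliable': False}
```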

Service Level (SL%) and Agent Occupancy Rate

Service Level is the percentage of calls answered within a defined threshold. The classic example is 80/20 (80% of calls answered within 20 seconds). It’s widely used in SLA-driven environments because it gives you a clear, measurable target.

Agent Occupancy Rate is the percentage of an agent’s available time spent handling interactions. High occupancy looks like productivity on paper, but sustained high occupancy leads to fatigue, lower quality, and higher turnover. People burn out.
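Both definitions reduce to simple ratios. The sketch below is one possible reading: service level is computed over all offered calls (some teams exclude abandons from the denominator), and occupancy divides handling time by available time. Function and parameter names are assumptions for the example.

```python
def service_level(calls, threshold_s=20):
    """Share of offered calls answered within `threshold_s`.
    `calls` is a list of (wait_s, answered) tuples."""
    if not calls:
        return 0.0
    within = sum(1 for wait_s, answered in calls if answered and wait_s <= threshold_s)
    return within / len(calls)

def occupancy(handle_s, available_s):
    """Share of an agent's available time spent handling interactions."""
    return handle_s / available_s if available_s else 0.0

calls = [(5, True), (12, True), (18, True), (25, True), (40, False),
         (8, True), (15, True), (19, True), (30, True), (10, True)]
print(service_level(calls))     # 0.7
print(occupancy(21600, 27000))  # 0.8
```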

These two are worth watching in tandem. High occupancy with declining service level usually means demand is exceeding capacity. Very low occupancy with a strong service level may mean you’ve over-allocated staff. And a strong service level with low FCR can create a false sense of efficiency; you’re answering calls fast, but if customers keep calling back, the operation gets more expensive underneath.

Neither metric exists in a vacuum. Service level is shaped by AHT, staffing levels, and call arrival patterns. Occupancy only makes sense alongside service level and handle time. The goal with both is balance, not maximization.
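To see how AHT, staffing, and arrival volume combine, the sketch below uses the Erlang C queueing formula, a common workforce-planning model that this article does not otherwise rely on. It assumes random (Poisson) arrivals, exponentially distributed handle times, and callers who never abandon, so real queues will deviate from it. All function names and the example volumes are illustrative.

```python
import math

def wait_probability(traffic, agents):
    """Erlang C: probability that an arriving call has to queue.
    `traffic` is offered load in Erlangs (arrival rate x AHT)."""
    if agents <= traffic:
        return 1.0  # load meets or exceeds capacity
    term = 1.0   # traffic**k / k! for k = 0
    total = 1.0  # running sum for k = 0 .. agents - 1
    for k in range(1, agents):
        term *= traffic / k
        total += term
    top = term * traffic / (agents - traffic)  # (A^N / N!) * N / (N - A)
    return top / (total + top)

def predicted_service_level(calls, period_s, aht_s, agents, threshold_s=20):
    """Predicted share of calls answered within `threshold_s`."""
    traffic = calls * aht_s / period_s
    if agents <= traffic:
        return 0.0
    pw = wait_probability(traffic, agents)
    return 1.0 - pw * math.exp(-(agents - traffic) * threshold_s / aht_s)

def agents_needed(calls, period_s, aht_s, target=0.80, threshold_s=20):
    """Smallest agent count that meets the target (target must be < 1)."""
    agents = int(calls * aht_s / period_s) + 1
    while predicted_service_level(calls, period_s, aht_s, agents, threshold_s) < target:
        agents += 1
    return agents

# 100 calls per half hour, 80/20 target.
print(agents_needed(100, 1800, aht_s=180))  # 14
print(agents_needed(100, 1800, aht_s=240))  # more agents for the same target
```

Under these assumptions, the same call volume needs a larger headcount once handle time rises from three minutes to four, which is the staffing effect described in the AHT section.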

How these metrics interact (and why tracking them in isolation fails)

Inbound metrics don’t operate independently. They influence each other, sometimes in ways that aren’t obvious until the damage is done.

When teams optimize one metric without watching the others, friction just shifts elsewhere in the system.

A few common patterns:

- Lower AHT without maintaining FCR: You push agents to shorten calls. Calls end faster, but they don’t get resolved. Customers call back. Repeat contacts increase total volume, which puts pressure right back on the queue.

- Higher occupancy over long periods: Agents spend nearly all their time on calls. Fatigue builds. Mistakes increase, conversations feel rushed, CSAT drops. Over time, attrition goes up too.

- High service level with low FCR: You’re answering calls fast, but not solving problems. Customers call back. The operation looks fast on the surface but gets more expensive underneath.

Inbound performance is a system. Improving one metric while ignoring the rest often just creates hidden costs.

Here’s a simplified view of how these tradeoffs typically play out:

| If you push this metric | Without watching | Likely result |
| --- | --- | --- |
| Lower AHT | FCR | More repeat contacts, higher total volume |
| Higher Occupancy | CSAT, Error rate | Agent fatigue, quality decline |
| Higher Service Level | FCR | Faster answers, but unresolved issues increase cost |
| Lower Staffing | ASA, Abandonment | Longer queues, more dropped calls |

How to track inbound metrics with operational accuracy

Tracking metrics is more than putting numbers on a dashboard. Here’s an example: your service level shows 85%. That sounds healthy. But if first call resolution is falling, repeat contacts may be quietly increasing total workload. The surface metric looks stable while underlying friction grows.

Accurate tracking means the data is clean, consistent, and reviewed in context. Without that, you get false confidence instead of clear decisions.

Here’s how to approach it.

Use real-time dashboards

Supervisors need to see what’s happening now, not just what happened yesterday or last week.

Real-time dashboards let teams monitor:

- Active calls

- Queue volume

- Average Speed of Answer

- Abandonment rate

- Agent availability

- Service level performance

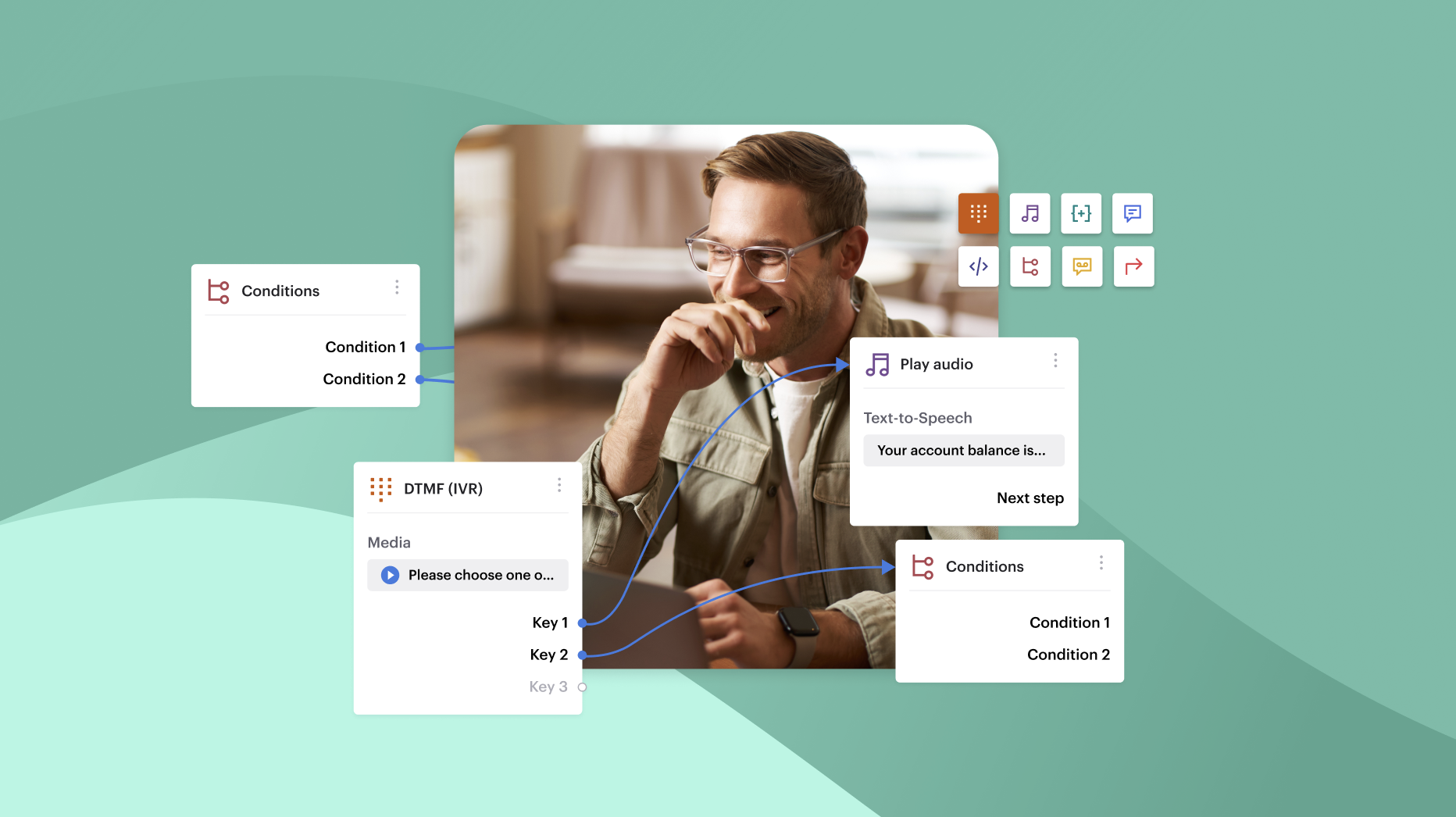

Live dashboards with KPI widgets let you track these across queues, so supervisors can respond early to instability instead of reacting after service levels have already dropped.

Automate data collection and reporting

Call data should be automatically logged and structured so your team can trust what they’re reviewing.

Call Detail Records (CDRs) give you structured data on:

- Call duration

- Wait time

- Hold time

- Call outcome

- Agent handling details

When you integrate CDRs with CRM systems like Salesforce, Zoho, or Freshdesk, calls can be logged automatically to matching contact or account records. This depends on your CRM configuration and the caller data available (for example, phone number matching), so it’s still worth auditing match rates and duplicate records over time.
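A match-rate audit can be as simple as the sketch below, which compares caller numbers from CDRs against phone numbers exported from the CRM. The normalization here is deliberately naive (it only strips non-digit characters and does not reconcile country codes), and the function names and sample numbers are made up for illustration.

```python
import re

def normalize(number):
    """Keep digits only. Naive: does not reconcile country-code variants."""
    return re.sub(r"\D", "", number)

def match_report(cdr_numbers, crm_numbers):
    """Share of CDR callers found in the CRM, plus CRM numbers
    that appear on more than one record."""
    crm_counts = {}
    for n in crm_numbers:
        key = normalize(n)
        crm_counts[key] = crm_counts.get(key, 0) + 1

    matched = sum(1 for n in cdr_numbers if normalize(n) in crm_counts)
    duplicates = sorted(k for k, count in crm_counts.items() if count > 1)
    rate = matched / len(cdr_numbers) if cdr_numbers else 0.0
    return {"match_rate": rate, "duplicate_crm_numbers": duplicates}

cdr = ["+1 (555) 010-0001", "+15550100002", "+1 555 010 0009", "15550100001"]
crm = ["+1-555-010-0001", "+1 555 010 0002", "1 (555) 010-0002"]
print(match_report(cdr, crm))
# {'match_rate': 0.75, 'duplicate_crm_numbers': ['15550100002']}
```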

This improves visibility into repeat contacts and customer history while reducing how often agents need to update records manually. Agents can still add notes, select dispositions, or correct associations when needed.

Integrate AI-powered speech analytics

Operational metrics tell you what happened. Post-call analytics can help explain why.

Speech analytics generates call transcripts and tracks keywords across conversations. That lets supervisors:

- Identify recurring topics

- Spot frequently mentioned issues

- Review talk ratios

- Score calls for quality monitoring

Voiso’s Speech Analytics supports transcript generation, keyword tracking, and call scoring for structured QA review. These insights come after the call, but they’re where you find recurring themes that affect resolution quality, customer experience, and QA review against internal guidelines.

Centralize multi-channel data

Inbound demand doesn’t arrive through voice alone anymore. Customers reach out via voice, SMS, WhatsApp, and chat.

When you track channels separately, performance data gets fragmented. Service levels might look stable in voice while digital queues grow unchecked.

Unified reporting across channels lets you:

- Track interaction volume across channels

- Monitor service levels and SLA targets across queues

- Spot channel shift trends

- Compare resolution patterns by channel

You need an omnichannel workspace that handles centralized interaction management and reporting, so your team maintains visibility no matter how customers reach you.

Maintain clean routing and IVR logic

Your metrics are only reliable if the call flow behind them is structured properly.

Poor routing logic inflates transfers, handle time, and abandonment. Rule-based IVR paths and queue configurations should reflect actual call intent. If calls are consistently bouncing between queues, the problem may not be agent performance. It may be routing design.

Review your flow configuration regularly to make sure routing rules still match real call patterns.
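One signal worth pulling during that review is the transfer-out rate per queue. The sketch below assumes each call record lists the queues it passed through in order (a hypothetical `queue_path` field); a queue that transfers out most of what it receives is a candidate for a routing or IVR fix rather than agent coaching.

```python
from collections import Counter

def transfer_rates(calls):
    """Share of calls each queue handled and then transferred onward.
    Each call is a dict with `queue_path`, an ordered list of queue names."""
    handled = Counter()
    transferred = Counter()
    for c in calls:
        path = c["queue_path"]
        for i, queue in enumerate(path):
            handled[queue] += 1
            if i < len(path) - 1:  # the call moved on to another queue
                transferred[queue] += 1
    return {queue: transferred[queue] / handled[queue] for queue in handled}

calls = [
    {"queue_path": ["general", "billing"]},
    {"queue_path": ["general", "tech"]},
    {"queue_path": ["general"]},
    {"queue_path": ["billing"]},
    {"queue_path": ["general", "billing", "tech"]},
]
rates = transfer_rates(calls)
print(rates["general"])  # 0.75
```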

Setting realistic benchmarks without distorting behavior

Poorly set targets push teams to chase numbers instead of solving problems.

Understand industry standards (carefully)

Industry benchmarks can be a useful starting point, but they vary widely by sector, queue type, and call complexity. If you use external targets, treat them as directional and validate them against your own baselines by queue.

Customize metrics to your business goals

Targets should reflect the experience you want to deliver.

If your brand promises fast access, Service Level and ASA carry more weight. If your priority is issue resolution, FCR matters more than handle time.

Call complexity matters too. Reducing AHT in a high-complexity queue can damage resolution quality. In that case, a slightly longer handle time is worth it if repeat contacts go down.

Benchmarks should support outcomes without creating pressure that just moves problems somewhere else.

Track trends, not snapshots

Daily performance is noisy. A single spike in abandonment or AHT doesn’t always mean something structural is wrong.

What matters more is direction:

- Is FCR improving month over month?

- Is occupancy staying elevated for sustained periods?

- Is service level holding during peak hours?

Trend analysis helps you separate temporary blips from real systemic friction. Review benchmarks regularly and adjust them when call patterns, staffing levels, or customer expectations shift.
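A trailing average is one simple way to separate direction from daily noise. The sketch below is a plain rolling mean over a list of daily values; the 7-day default is an assumption, and positions without a full window return `None` rather than a partial average.

```python
def rolling_mean(values, window=7):
    """Trailing mean over the last `window` values.
    Positions without a full window are None."""
    out = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            out.append(sum(chunk) / window)
    return out

print(rolling_mean([1, 2, 3, 4, 5], window=3))
# [None, None, 2.0, 3.0, 4.0]
```

Applied to daily abandonment or FCR, a single bad day moves the smoothed line only slightly, while a sustained shift shows up as a steady drift.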

Common mistakes that quietly erode performance

Tracking metrics is necessary. But the way you use them can either strengthen the operation or quietly damage it.

The most common mistake is chasing low AHT without context. AHT is easy to measure, which makes it tempting to optimize. But pushing agents to end calls faster often reduces FCR. Customers leave without a clear resolution, then call back. That repeat contact increases total workload and queue pressure. It hurts CSAT too: customers can tell when they’re being rushed off the phone. Speed matters, but only when resolution quality stays stable. A slightly longer call that prevents a callback is almost always more efficient than a short call that creates one.

The second is keeping KPIs locked in management dashboards. If agents don’t understand what the metrics mean and why they matter, performance becomes mechanical. They cut handle time without understanding the FCR tradeoff. They avoid necessary transfers just to protect a metric. When agents understand how resolution reduces volume, how occupancy affects fatigue, and how service level affects customer wait time, they make better decisions on every call. Metrics work better when they’re shared and explained.

The third is simpler: tracking too many metrics at once. When you give dozens of KPIs equal weight, focus gets diluted. Most inbound teams can run effectively with FCR, AHT, Service Level or ASA, Abandonment Rate, CSAT, and Occupancy. Other metrics can support deeper analysis, but these tell the main story.

Putting it together

The short version: pick your core metrics (FCR, AHT, Service Level or ASA, Abandonment Rate, CSAT, and Occupancy), configure reporting by queue instead of relying on global averages, standardize your wrap-up codes, and review trends weekly. Then use what you find to adjust staffing and routing.

That’s the whole framework. The hard part isn’t knowing what to measure. It’s building the discipline to review it consistently and act on what the data tells you, even when the surface numbers look fine.

If you’re evaluating tooling to support this, Voiso’s platform is built around this kind of structured inbound reporting: real-time dashboards, Speech Analytics for post-call QA, CDR integration, and omnichannel visibility. See how it works.